This is the second article in a three-part series.

- First article | Generative AI & The Effects on Businesses

- Third article | Embracing (Generative) AI As A Strategic Advantage

For all the benefits and conveniences of generative AI, including but not limited to ChatGPT, there are significant risks that must be addressed. After all, getting assistance to solve a problem is one thing, but what happens when the prompt you’ve typed in accidentally leaks your company’s private information?

The recent case with Samsung brings to light the repercussions that can occur when using a publicly available service. Whatever you key into the chatbot will not necessarily remain confidential, so what’s the next step to take?

Our last issue covered a brief overview of how generative AI operates, its impact on various business structures, and the pros and cons of implementing AI systems in the workplace. In this issue, we’ll dive deeper into the potential risks and pitfalls of using generative AI, and how to prevent problems like intellectual property theft and data protection breaches.

Data Privacy and Customer Data Protection

Given how generative AI operates, data privacy deserves careful consideration. AI thrives through machine and deep learning, which means its performance continually improves as the quantity and quality of the data it collects increase.

This is where the line between security and data protection gets blurred. The more data the AI accumulates, the greater the risk of leaked personal information. In addition, there’s the matter of consent and transparency surrounding data collection. An article by MIT Technology Review reported that OpenAI is currently being investigated for allegedly scraping people’s data and using it without consent.

More importantly, companies need to know where consumer data goes and why, be it an internal server, a cloud solution, or a prompt into a large language model (LLM). This is especially pertinent now that individual employees have at their fingertips a roster of free solutions that can cause a data leak, often inadvertently and sometimes through sloppiness.

Put simply, companies that want to implement AI need to be honest with consumers and disclose:

- How their personal data is being collected

- How it’s being used and processed

- The type of data being collected

- The purposes of collecting data

- The legalities of collecting data

Secondly, companies should acquire the consent they need from their consumers to process the data for the reasons stated. Consumers must be given a chance to say in clear terms that they agree to their data being processed. Other considerations that should be taken into account include:

- Applying robust security measures to prevent personal data from being misused or accessed without authorisation.

- Monitoring and auditing that the use of personal data is done in compliance with the consent granted by consumers and for legitimate reasons.

- Ensuring that the collected data follows relevant data protection regulations and laws.
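As a rough illustration of the points above, the sketch below checks recorded consent for a stated purpose and masks obvious personal data before any text is sent to an external LLM. It is a minimal example, not a complete safeguard: the function names, the `consented_purposes` set, and the two regular expressions are assumptions made for illustration, and real personal-data detection needs far broader coverage.

```python
import re

# Illustrative patterns only (an assumption); they will miss many kinds of personal data.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{6,}\d"),
}


def redact(text: str) -> str:
    """Replace each matched pattern with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def prepare_prompt(text: str, consented_purposes: set[str], purpose: str) -> str:
    """Return redacted text, but only if consent exists for this purpose."""
    if purpose not in consented_purposes:
        raise PermissionError(f"No consent recorded for purpose: {purpose}")
    return redact(text)


# Hypothetical usage: a support ticket about to be summarised by an LLM.
ticket = "Contact jane@example.com or +65 6123 4567 today"
safe_prompt = prepare_prompt(ticket, {"support"}, "support")
print(safe_prompt)
```

Keeping the consent check and the redaction in one place means there is a single point to audit when reviewing how personal data reaches third-party tools.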

Controversy Over Job Cuts and AI-Driven Workforce Changes

Whether AI will take over jobs and cause unemployment in specific sectors has been an ongoing debate. We can’t ignore that the more AI technology advances, the wider the range of occupations that could be affected, and this time, it isn’t just menial jobs that are at risk. Various roles could be impacted, from tech and media jobs to the legal and finance industries. Goldman Sachs economists have estimated that roughly 300 million jobs globally could be replaced by AI.

While there may be losses, implementing AI in the workforce is also expected to create new job opportunities. Even though there will most likely be a shift in how jobs are performed, it doesn’t always mean that roles will be completely automated.

Instead, it is more likely that people will work together with AI rather than being replaced by it. Repetitive tasks might still be automated, but this also means there’s more room to prioritise other areas of the job and maximise strategic decision-making.

Imagine halving the time it takes for a software developer to write and debug code. Will you retrench half of the developers? Or will this be a solution to your hiring headaches? Or will you be able to use the extra capacity and extra software quality to move faster than the competition?

In any case, companies should start expanding their talent and career development training to adapt to the world of AI responsibly. Employees will not only have the chance to upskill and grow professionally, but will also better understand how to use AI ethically while learning how to contribute to its development. (More on this in our third issue.)

Intellectual Property (IP) and AI

Intellectual property rights can be tricky to navigate, considering that the ownership of works produced by generative AI is unclear or hard to track down. The problem arises when the AI’s training data draws directly on other creators’ works without an approved licence.

Apart from the risk of generating unauthorised derivative works, businesses might face lawsuits based on copyright infringement. With that said, there is still the question of whether AI-generated works should be subject to IP rights at all, especially if AI isn’t even viewed as a legitimate creator in the first place.

There is also a need for clarification regarding the definition of “derivative works” and whether the fair use doctrine can be applied. With all these grey areas and uncertainties, companies should take precautionary measures to protect themselves in the long run. These include:

- Complying with IP laws and regulations.

- Licensing and compensating creators who own the IP rights for the data used in training.

- Conducting IP clearance searches to prevent clashes with existing IP rights and avoid unintentional plagiarism.

- Being transparent with their consumers on whether their protected content has been used in the training data.

- Developing an IP strategy for your own company’s IP assets to reduce the risk of third-party infringement.

Tackling Bias in AI and Ensuring Accountability

Though AI may be developed to generate content with increasingly accurate results, it can also pick up biases from its training data. For instance, a hiring tool might filter out candidates of a particular gender for specific job roles. In 2018, Amazon had to scrap its experimental AI recruitment tool, as its system recognised male candidates as preferable and penalised resumes containing the word ‘women’.

Other problematic biases can also stem from racial discrimination. In October 2019, researchers discovered that an algorithm used in US hospitals to predict which patients would likely need extra medical care demonstrated heavy favouritism for white patients over black patients.

As these cases show, the implications of such biases go beyond a simple error in a trained system. They can harm people and place certain social groups at a disadvantage. To tackle these issues, organisations should first address the human and systemic biases from which AI training data biases can stem. Here are some factors that need to be considered, based on a McKinsey study:

- Identify and monitor the areas in the training data that could be susceptible to unfair biases.

- Set up a framework of technical and operational tools for testing to help reduce biases in AI systems.

- Discuss with colleagues the unconscious human biases that may have gone unnoticed and learn how to improve on them.

- Consider how to make the most of the collaboration between humans and AI. Identify the areas that require human-based decision-making and the ones that can be automated.

- Widen the training data range available for research and take on a multidisciplinary approach to further eliminate biased results.

- Diversify the AI development team within your company.

In short, saying “the data says so” or “the computer says to do so” without understanding why should immediately raise a red flag for AI bias.
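One simple way to monitor for unfair bias is to compare how often a system selects people from different groups. The sketch below is a minimal example of that idea and is not taken from the McKinsey study: the group labels and sample data are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb, used here as an assumption. A low ratio is a prompt for human review, not proof of discrimination.

```python
from collections import Counter


def selection_rates(records):
    """records: iterable of (group, selected) pairs; returns the selection rate per group."""
    totals, selected = Counter(), Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to report
    return min(rates.values()) / highest


# Hypothetical screening outcomes: (group, shortlisted?)
sample = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths rule of thumb (an assumption, not a legal test)
print(rates, ratio, needs_review)
```

Running a check like this on every model release, and recording the result, gives teams something concrete to point to when asked why the system behaves as it does.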

Navigating Government Regulations and Compliance

Though there are currently no dedicated laws in place, proposals have been made by Singapore, the UK, Europe and many other countries to regulate the usage of AI moving forward. The proposed regulations reflect each country’s ethos towards the use of AI, ranging from cautious and conservative to progressive and pioneering. Here’s a simple breakdown for Europe, the UK and Singapore:

Europe takes a more conservative approach by sorting specific uses of AI into four risk categories:

- Unacceptable risk refers to systems that would be banned.

- High risk refers to systems involving sensitive information, which would be regulated.

- Limited risk refers to systems that must be transparent with their customers about their use of AI.

- Minimal risk refers to AI systems, such as AI-enabled video games or spam filters, that can be used freely.

The UK has a risk-based approach very similar to Europe’s but aims to regulate the use of AI and not the technology itself. It has six cross-sectoral principles that aim to assist regulators to:

- Ensure that AI is used safely.

- Ensure that AI is technically secure and functions as designed.

- Ensure that AI is appropriately transparent and explainable.

- Embed considerations of fairness into AI.

- Define legal persons’ responsibility for AI governance.

- Clarify routes to redress or contestability.

Conversely, Singapore’s proposed policies work towards a future where AI and humans work together. They emphasise the need for the human touch in the research and development of ethical AI systems. Their proposals include the following:

- Launching an AI Governance Testing Framework and Toolkit, A.I. Verify, to promote transparency between companies and stakeholders and demonstrate responsible AI. Through standardised tests, developers and owners can validate the claimed performance of their AI systems against a set of standards. A.I. Verify combines open source testing methods, including process checks, into a Toolkit for easy self-assessment. The Toolkit will provide reports covering the main aspects affecting AI performance for developers, management, and business partners.

- Introducing a Model AI Governance Framework to guide private sector organisations on ethical and governance issues when deploying AI solutions.

- Providing an Implementation and Self-Assessment Guide for Organisations (ISAGO), which aims to align the AI governance practices of organisations with the Model Framework.

- Forming an Advisory Council on the Ethical Use of AI and Data (Advisory Council), which:

  - Advises the Government on ethical, policy, and governance issues that stem from data-driven technologies.

  - Guides businesses to limit ethical, governance, and sustainability risks and reduce the adverse impact on consumers from using data-driven technologies.

At the very least, understanding how you use AI, what decisions are made with it, what artefacts it creates, and what data it is trained with is a good starting point for building the capability to follow future rules.
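One way to start is a simple inventory of AI use cases. The sketch below is a minimal example under stated assumptions: the record fields mirror the questions in the paragraph above, the tier names mirror the four European categories described earlier, and the class name, function name, and sample entries are all hypothetical rather than drawn from any regulation.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    # Tier names follow the four European categories; the mapping is illustrative.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AIUseRecord:
    name: str                         # how AI is used
    decisions_supported: list[str]    # what decisions are made with it
    artefacts_created: list[str]      # what it produces
    training_data_sources: list[str]  # what data it is trained with
    risk_tier: RiskTier


def needs_attention(inventory: list[AIUseRecord]) -> list[str]:
    """Names of use cases that are high risk or worse, or lack documented training data."""
    flagged_tiers = {RiskTier.UNACCEPTABLE, RiskTier.HIGH}
    return [
        record.name
        for record in inventory
        if record.risk_tier in flagged_tiers or not record.training_data_sources
    ]


# Hypothetical inventory entries.
inventory = [
    AIUseRecord("CV screening", ["shortlisting"], ["candidate scores"],
                ["past hiring data"], RiskTier.HIGH),
    AIUseRecord("Spam filter", ["mail routing"], ["spam labels"],
                ["public spam corpus"], RiskTier.MINIMAL),
    AIUseRecord("Marketing copy assistant", [], ["draft emails"],
                [], RiskTier.LIMITED),
]
print(needs_attention(inventory))
```

Even a spreadsheet with these columns would serve the same purpose; the point is that each use case has an owner-readable record before regulators ask for one.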

Mindfully Incorporating AI Into Your Business

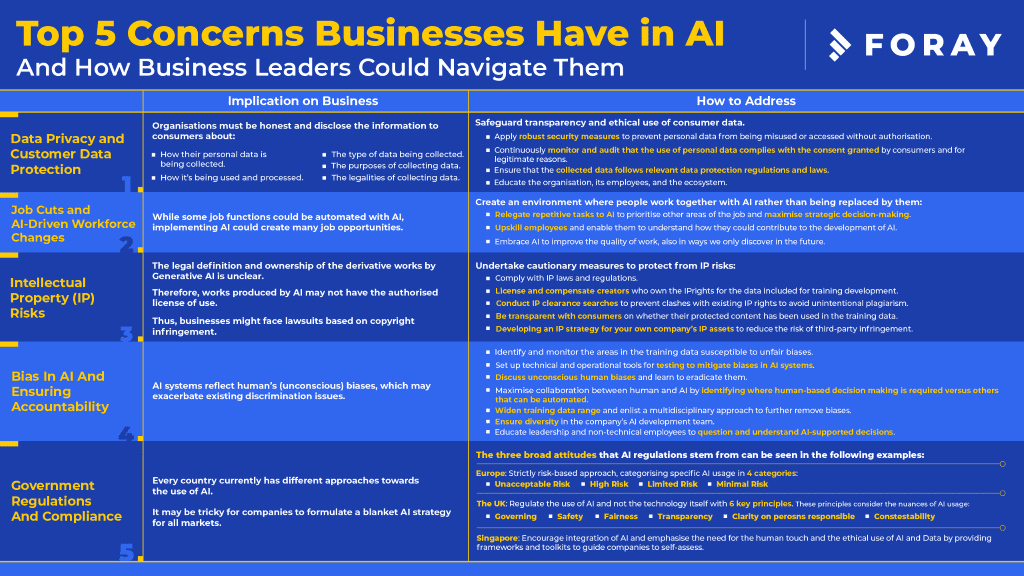

Despite these risks, the benefits of implementing AI in your business can easily outweigh the issues it brings, so long as the pitfalls are adequately addressed with the right plans for early prevention. Below is a guide sheet on how business leaders could successfully navigate these issues.

Stay tuned for our next issue, where we’ll cover how to put together an effective AI strategy and make it work to your best advantage. That said, if you have any comments or questions regarding managing the use of Generative AI right now, share your thoughts with me at vittorio@forayadvisory.com!

Photo by DilokaStudio on Freepik.
