To gain a competitive edge, leaders must embed responsible AI across the business and align with what really matters to consumers.

In brief

- Many C-suite leaders overestimate how aligned they are with consumer concerns about AI.

- Clear communication of responsible AI practices can boost adoption by helping consumers feel more informed and reassured.

- CEOs are leading on responsible AI, but broader C-suite alignment is needed.

Artificial intelligence (AI) is advancing at breakneck speed. Businesses are racing to identify use cases, as well as integrate and scale AI across their products, services and operations. But successful deployment depends on more than just speed. Responsible AI is a critical enabler of adoption and long-term value creation.

Most companies now have responsible AI principles in place — but how well are they putting them into practice? How well do they understand consumer concerns? And how much confidence do they have that they are well prepared for the new risks emerging from the next wave of autonomous AI models and technologies?

To help answer these questions, EY has launched a Responsible AI Pulse survey — the first in a series — to provide a regular snapshot of business leaders’ real-world views on responsible AI adoption.

In March and April 2025, the global EY organisation conducted research to better understand C-suite views on responsible AI for the current and next wave of AI technologies. We surveyed 975 C-suite leaders across seven roles: chief executive officers, chief financial officers, chief human resource officers, chief information officers, chief technology officers, chief marketing officers and chief risk officers. All respondents had some level of responsibility for AI within their organisation. Respondents represented organisations with over US$1 billion in annual revenue across all major sectors and 21 countries in the Americas, Asia-Pacific (APAC), and Europe, the Middle East, India and Africa (EMEIA).

Within this article, we also reference findings from the EY AI Sentiment Index Study, which surveyed 15,060 everyday people across 15 countries to understand global sentiment toward AI. A full methodology for the EY AI Sentiment Index Study is available from EY.

The first wave of this survey reveals a significant gap between C-suite perceptions and consumer sentiment. Many C-suite leaders have misplaced confidence in the strength of their responsible AI practices and in their alignment with consumer concerns. These gaps have real consequences for user adoption and trust, and those consequences are likely to grow as agentic AI increasingly enters the market.

CEOs stand out as an exception — showing greater concern around responsible AI, a viewpoint that’s more closely aligned with consumer sentiment. Other C-suite leaders are half as likely to express similar concerns, potentially due to lower levels of awareness or lower perceived accountability. By tuning in to the voice of the customer, embedding responsible AI across the innovation lifecycle, and making these efforts central to brand and messaging, organisations can begin to close the confidence gap and give consumers greater assurance in how AI is being developed and deployed. The payoff? Increased trust and a potential competitive edge.

Many C-suite Leaders are Unaware of AI’s True Potential

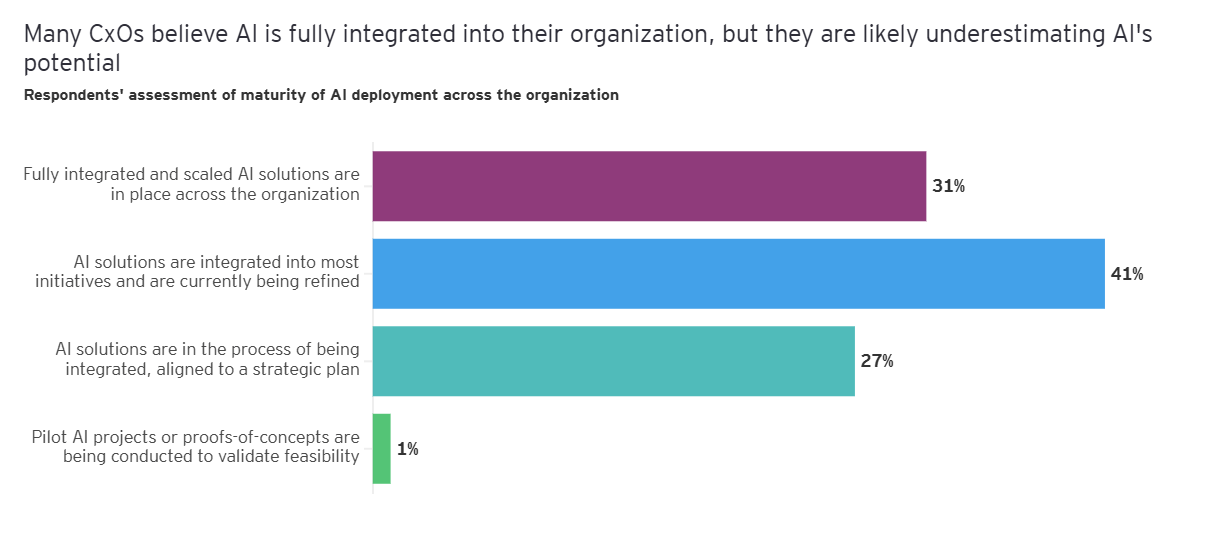

A significant share of CxO respondents (31%) say their organisations have “fully integrated and scaled AI solutions” in place across the organisation. While this might seem high, it reflects the pace of adoption of large language models (LLMs), which are rapidly becoming business as usual across enterprises.

It may also reflect an imperfect understanding of AI’s true potential. Truly integrating and scaling AI across an entire organisation requires much more than the technical deployment of LLMs. It involves reimagining business processes, identifying high-value use cases, and investing in the underlying foundations, from data readiness and governance to AI solution engineering, talent and change management. Forward-looking CxOs recognise this and are ramping up efforts to build the infrastructure and skills required to stay ahead.

But AI is an ongoing journey. Implemented technologies often reach a steady state, even as more advanced capabilities emerge. The next phase is already taking shape: organisations are preparing to invest significantly in agentic AI and other transformative AI models that go beyond automation to support reasoning, decision-making and dynamic task execution.

“AI implementation is unlike the deployment of prior technologies,” says Cathy Cobey, EY Global Responsible AI Leader, Assurance. “It’s not a ‘one-and-done’ exercise but a journey, where your AI governance and controls need to keep pace with investments in AI functionality. Maintaining trust and confidence in AI will require continuous education of consumers and senior leadership, including the board, on the risks associated with AI technologies and how the organisation has responded with effective governance and controls.”

CxOs are Poorly Aligned with Consumers on Responsible AI

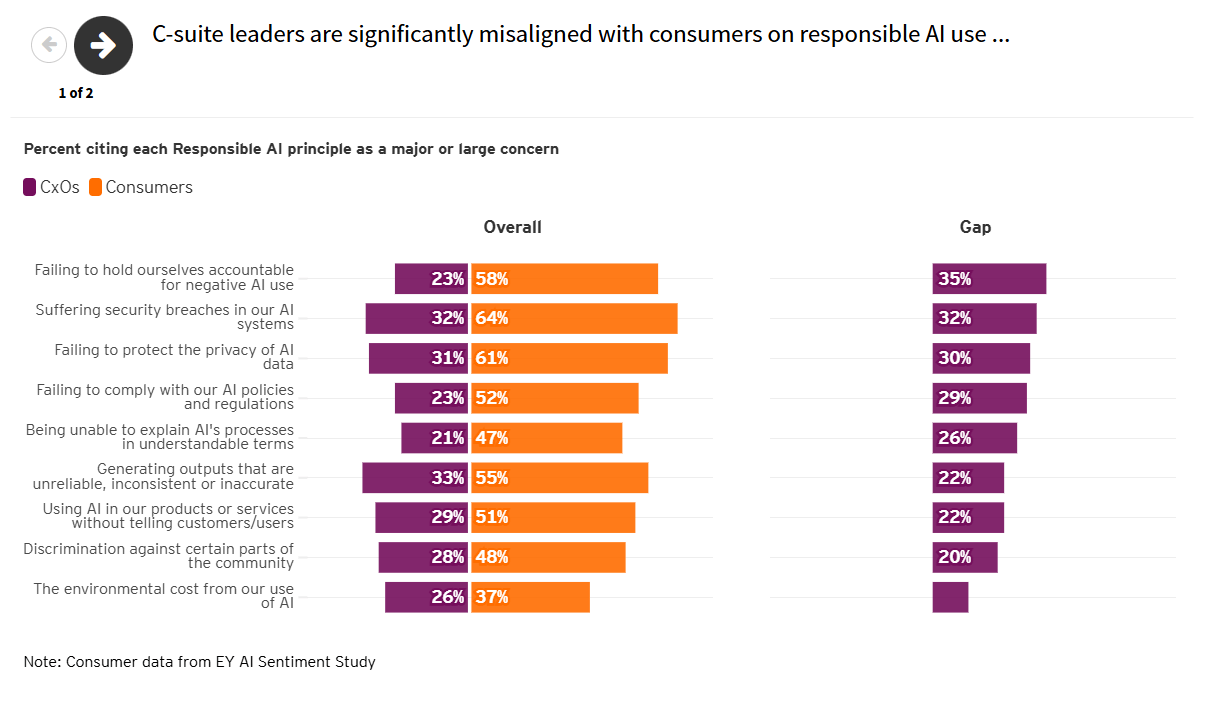

Nearly two in three CxOs (63%) think they are well aligned with consumers on their perceptions and use of AI. Yet, when compared with data from the EY AI Sentiment Index study — a 15-country survey of 15,060 consumers — it becomes clear this confidence is misplaced.

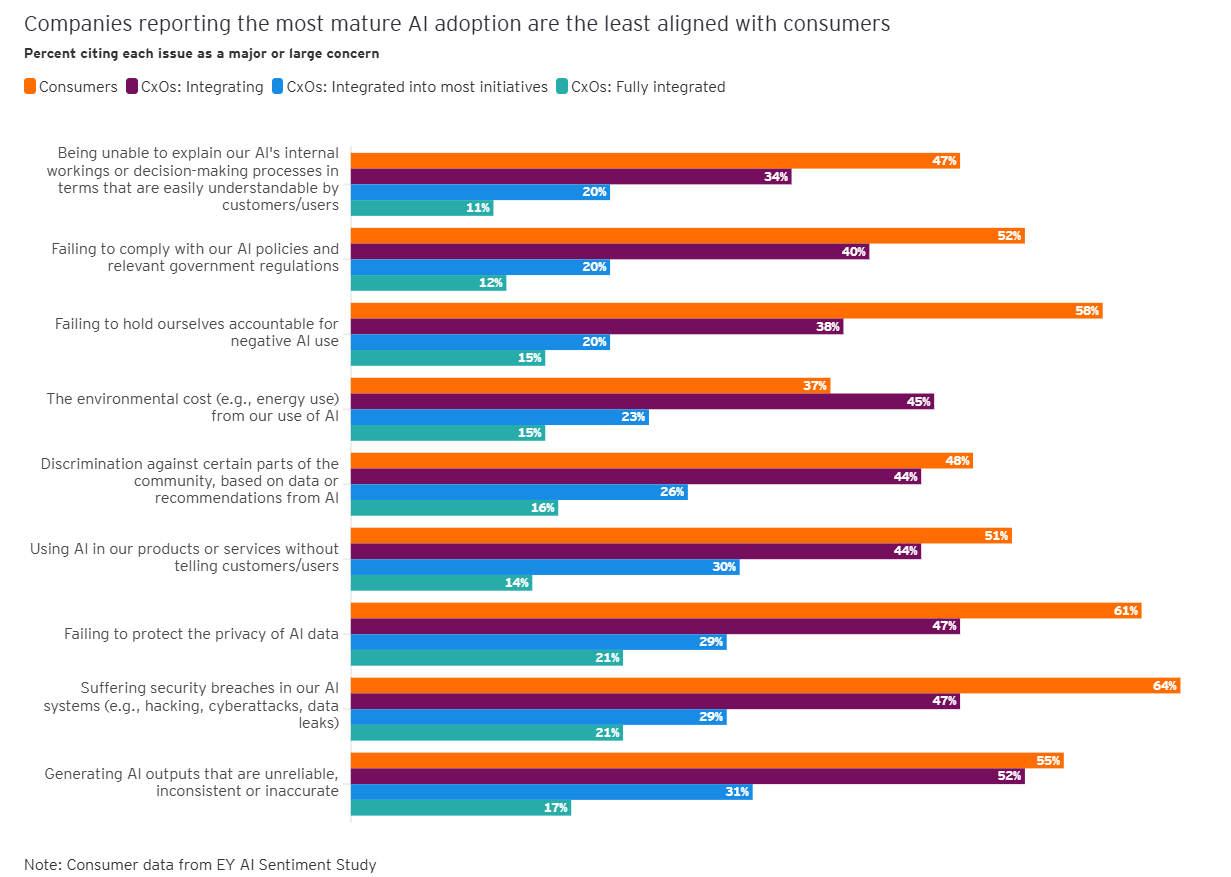

Across a range of responsible AI principles, from accuracy and privacy to explainability and accountability, consumers consistently express greater concern than CxOs. In most cases, they are about twice as likely to worry that companies will fail to uphold these principles.

This bar chart shows the misalignment between CxOs and consumers around responsible AI use and potential societal harms from AI. Sixty-four percent of consumers rate security breaches in AI systems as a major or large concern, compared with only 32% of CxOs.

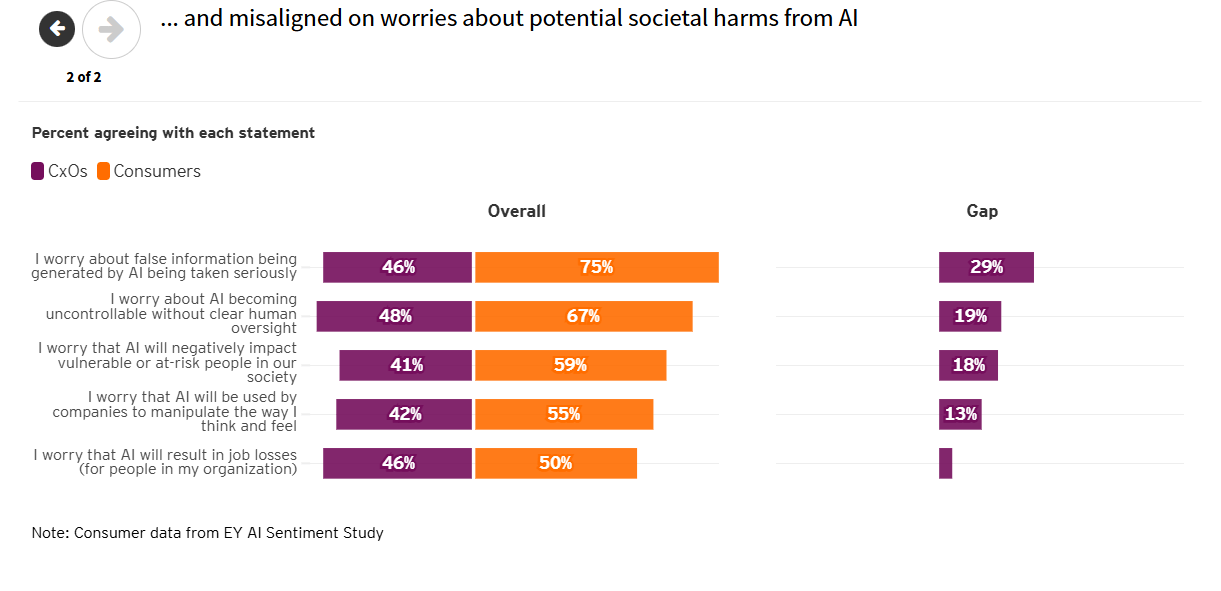

CxO respondents are also less concerned than consumers about a range of potential AI-related societal harms. Interestingly, the issue on which both groups are most aligned is job losses — and neither considers it much of a concern. This is noteworthy given how much attention the potential for AI-related job losses has received in the media over the years. Instead, consumers are more worried — significantly more so than CxOs — about issues such as AI-generated misinformation, the use of AI to manipulate individuals, and AI’s impact on vulnerable segments of society.

This misalignment may also be due to organisations under-communicating the maturity of their AI governance, their approach to managing risks, or the safeguards they’ve already put in place. Strengthening transparency around AI risk profiles and responsible innovation practices could help close this gap — helping consumers to feel more informed and reassured.

More AI, More Overconfidence?

Interestingly, companies that are still in the process of integrating AI (“integrating companies”) tend to be more cautious in assessing how aligned they are with consumer attitudes. Just over half (51%) of CxOs in this group believe they’re well aligned — compared with 71% of CxOs in organisations where AI is already fully integrated across the business.

But integrating companies show stronger alignment with consumers — particularly in their levels of concern around responsible AI. CxOs in this group express higher concern across key principles such as privacy, security and reliability — concerns that closely mirror those of consumers.

This could reflect a heightened sensitivity during the early stages of AI adoption, where governance structures and stakeholder engagement are still being shaped. By contrast, CxOs who report having more mature AI deployment may feel more confident in the strength of their controls and safeguards, and therefore express lower levels of concern.

Another possible explanation for this mismatch is that a significant portion of CxOs in the “fully integrated” cohort have an incomplete understanding of AI’s potential. Leaders with limited AI awareness may be overconfident about their alignment with consumers, and even about the robustness of their AI governance.

Regardless, the gap between consumer concerns and perceived alignment remains significant — and could affect public confidence in AI-enabled services. Even with robust responsible AI practices in place, if consumers don’t understand how risks are being managed, they may remain hesitant to engage. That’s why transparency and communication are critical.

Indeed, the EY AI Sentiment Index Study shows that there are significant gaps between consumers’ openness to AI and their adoption of the technology — especially in sectors such as finance, health, and public services where trust is paramount.

Newer AI Models Signal Greater Governance Challenges

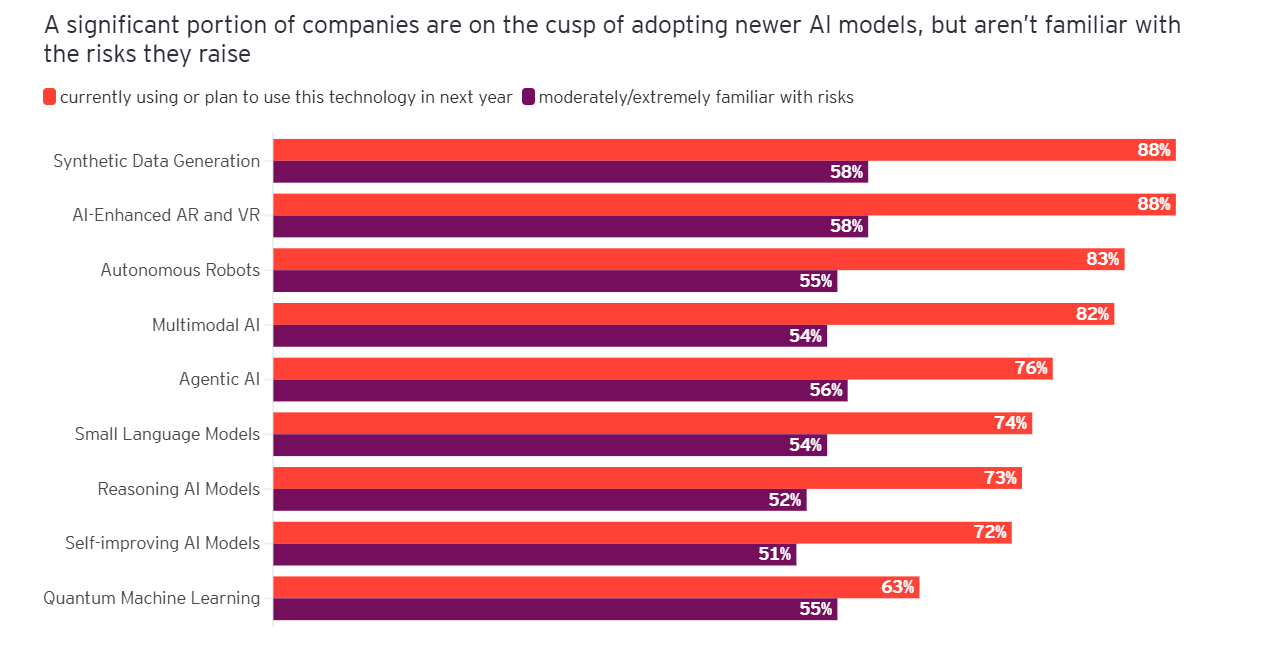

Many companies are still grappling with implementing responsible AI principles for generative AI (GenAI) and LLMs. On average, organisations have strong controls in place for only three out of nine responsible AI principles. Over half (51%) agree that it is challenging for their organisation to develop governance frameworks for current AI technologies, and the outlook for emerging AI technologies is even more concerning: half of CxOs say their organisation’s approach to technology-related risks is insufficient to address the new challenges associated with the next wave of AI.

The nine responsible AI principles are:

- Accountability: There is unambiguous ownership over AI systems, their impacts and resulting outputs across the AI lifecycle.

- Data protection: Use of data in AI systems is consistent with permitted rights, maintains confidentiality of business and personal information, and reflects ethical norms.

- Reliability: AI systems are aligned with stakeholder expectations and continually perform at a desired level of precision and consistency.

- Security: AI systems, their input and output data are secured from unauthorised access and resilient against corruption and adversarial attack.

- Transparency: Appropriate levels of disclosure regarding the purpose, design and impact of AI systems are provided so that stakeholders, including end users, can understand, evaluate and correctly employ AI systems and their outputs.

- Explainability: Appropriate levels of explanation are enabled so that the decision criteria and output of AI systems can be reasonably understood, challenged and validated by human operators.

- Fairness: The needs of all impacted stakeholders are assessed with respect to the design and use of AI systems and their outputs to promote a positive and inclusive societal impact.

- Compliance: The design, implementation and use of AI systems and their outputs comply with relevant laws, regulations and professional standards.

- Sustainability: Considerations of the impacts of technology are embedded throughout the AI lifecycle to promote physical, social, economic and planetary well-being.

In fact, a significant share of companies that are using these next-wave technologies, or plan to begin using them within the next year, lack even a moderate level of familiarity with the risks they raise. Companies need to close this awareness gap now to encourage greater confidence and adoption.

CEOs are Leading the Way

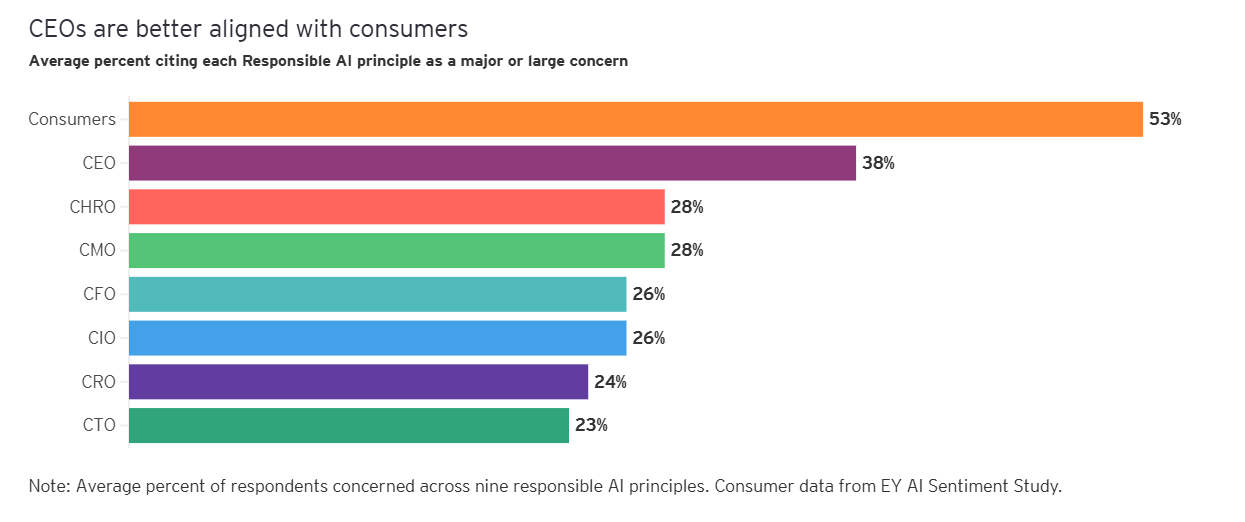

CEOs are a bright spot in the data: they stand apart from other C-suite leaders in their awareness of responsible AI issues and their alignment with consumers. Compared with the other six roles surveyed (CFOs, CHROs, CIOs, CTOs, CMOs and CROs), CEOs are:

- Best aligned with consumers on responsible AI concerns

- Least likely to overstate the strength of their controls

- Among the most familiar with emerging technologies and their risks (second only to CIOs and CTOs)

This likely reflects their broader mandate: everything rolls up to the CEO, including AI. Fifty percent of CEOs say they have primary responsibility for AI, more than any other role except the CTO and CIO. CEOs are also more customer-facing than the other C-suite officers surveyed, with the possible exception of CMOs, which keeps them closer to consumer concerns.

AI is a pervasive technology, with implications for every aspect of the enterprise and its business model. The CEO’s balanced view of innovation, risk and stakeholder needs puts them in a strong position to champion responsible AI — and to help their peers across the C-suite do the same.

Three Actions for CxOs: Listen, Act, Communicate

What can CxOs do to act on these findings? We suggest three key actions:

1. Listen: Expose the entire C-suite to the voice of the customer

Consumers have sizeable concerns about the responsible use of AI and companies’ adherence to these principles. Leaders across the C-suite need to develop a better awareness of the concerns and preferences of their customers. Critically, this includes not just leaders with defined market-facing roles, such as the CEO or CMO, but also those who have traditionally worked in more of a back-office capacity, such as CTOs and CIOs.

This can happen in several ways. Structure opportunities to connect the dots across the C-suite, allowing non-customer-facing leaders to learn from market-facing executives. Even better, put officers such as CIOs, CTOs and CROs in customer-facing situations: expose them to customer feedback and surveys, and have them participate in focus groups. Wherever possible, place them directly in customer settings. For instance, healthcare companies could make it mandatory for all C-suite leaders to spend a few days a year interacting with patients in a hospital or clinic.

2. Act: Integrate responsible AI throughout the development process

Responsible AI needs to be part of the entire AI development and innovation process, from early ideation to deployment. Technology designers already use practices such as human-centric design and A/B testing to optimise the user experience. Build on these best practices to make “human-centric responsible AI design” an integral element of your end-to-end AI innovation. Go beyond regulatory requirements to develop robust approaches that address the key risks and customer concerns raised by your AI applications and use cases.

Newer AI models, such as agentic AI, are already here. Others will follow, and recent history suggests they may arrive sooner than expected. It’s important to understand how these emerging models will create new risks and AI governance challenges, and to start identifying ways to address them now.

Continually upskill yourself and your teams, through internal training, industry conferences or engagement with external experts, to stay ahead of emerging and heightened risks as these technologies continue to advance.

3. Communicate: Showcase your responsible AI practices

Responsible AI is more than a risk management or compliance exercise; the data suggests it can be a competitive differentiator. Consumers have real worries about AI, and if they don’t trust your systems, they won’t use them. Left unaddressed, that distrust will ultimately hurt revenue.

But this also suggests a market opportunity. In the absence of transparent information about how companies are addressing their worries, consumers view companies in a uniform and undifferentiated way. By taking the lead on responsible AI and making it a centrepiece of your brand and messaging, you can stand out in the eyes of your current and potential customers and position yourself ahead of the competition.

Summary

To gain a competitive edge, CxOs must prioritise customer concerns, embed responsible AI across the development cycle, and communicate how AI risks are mitigated. Understanding consumer views and integrating responsible AI at every stage of innovation is key. This approach will help businesses build confidence in their AI applications, ensuring they align with consumer expectations and mitigate risks associated with emerging AI technologies. By doing so, organisations can position themselves as leaders in responsible AI while creating long-term value.

The article was first published by EY.

Photo by Andres Siimon on Unsplash.
