Famously, in 2008, the Queen asked economists why no-one saw the great financial crisis coming, not least as they were giving her a full retrospective inquest into why it happened1. She might well ask the same question again now about the COVID-19 pandemic. Of course, some commentators did predict the likelihood of a similar pandemic. Bill Gates, not a renowned epidemiologist, said in 2015: “If anything kills over 10 million people in the next few decades, it’s most likely to be a highly infectious virus…not missiles, but microbes”.2

We know about the failure of governments and health authorities to prepare for this pandemic, but does this excuse companies from a similar failure to face up to this risk? Why didn’t we see at least the risk of this coming? With all the effort we put into company risk models, how did we miss the greatest blow to global commerce since the last world war?

Was it a black swan?

There has been much talk of this as a black swan event.

“A black swan is an unpredictable event that is beyond what is normally expected of a situation and has potentially severe consequences. Black swan events are characterized by their extreme rarity, their severe impact, and the widespread insistence they were obvious in hindsight.”3

Whilst we can agree that the COVID-19 pandemic has had a severe impact, we can’t really console ourselves that new virus outbreaks are extremely rare. There have been many since the great 1918 flu pandemic. The World Health Organisation4 lists 20 current pandemic and epidemic diseases, including Ebola, flu, MERS, SARS and Zika. Whilst the spread of COVID-19 has exceeded all the others bar flu, it fortunately has a lower death rate than most of the others.

The risk of a new virus is therefore high (most risk models would call it ‘certain’ now). The impact of this one has also been unusually large, but it should not have been surprising. It is an example of what Bazerman and Watkins call a ‘predictable surprise’5. The governmental reaction across the world has been unprecedented and extreme. However, in retrospect, this too was a predictable surprise6. It is the shutdown of large parts of the economy and trade, rather than the direct effect of the virus itself, that has caused the major unforeseen damage to companies.

A failure of corporate risk management?

There are three key reasons why risk management fails:

- You don’t think of the risk in the first place, or believe that it is so unlikely that it’s not worth even considering.

- You recognise the risk, but the impact, when it happens, is worse than your worst case.

- You acknowledge the risk and the possible impact, but you don’t do anything about it.

I don’t suppose many company risk committees have spent much time debating whether a pandemic would happen. On the other hand, companies will have debated the risks of a downturn in revenue or not getting access to offices and other work locations. However, not many will have modelled the impact of revenue going to zero or work-places being closed for months. Even then, how many boards would have actually had – or could have afforded – a realistic plan to respond to such extreme outcomes?

The people vulnerabilities in risk management

There is much written about the importance of good process in risk management7. However, a process is only as good as the human inputs it gets, and psychology has plenty of reasons why humans fail to appreciate risks. Here are a few of the problems we face:

Optimism bias – apart from a few Cassandras (not a common feature in board directors outside of CFOs), people generally look on the bright side and believe that things will work out in the end. In the early days of the current pandemic, most of us just thought it would be ok for us one way or another.

Confirmation bias – people tend to look for, and apply more weight to, evidence that supports their pre-existing views and frequently ignore contradictory evidence. Many were reassured by the early reports of low death rates, without looking hard at the contrary evidence of spread, misreporting of data or the maths of exponential growth.

Present-day bias – we are much more influenced by recent events. The 1918 flu pandemic is beyond living memory. At least this bias means that risk models will now include pandemics!

Distance bias – Famously, Chamberlain described the Sudetenland dispute in 1938 as a “quarrel in a far away country, between people of whom we know nothing”8. And we know how that turned out. This pandemic started in China, which is even further away. We would have reacted much quicker if it had started in Smithfield market in London.

Self-serving bias – The possible risk was too dreadful for companies to consider and the actions necessary to protect the business would have damaged both employees and shareholders.

Simplicity bias – Given different scenarios and explanations, people tend to prefer the simple one9. This is perhaps demonstrated in extreme form by President Trump’s reactions to the pandemic.

Group thinking – It takes some courage to think differently from the crowd; herd instinct rather than herd immunity. An annual report risk model with a significant chance of a pandemic closing the business for months would have been laughed at a few months ago.

Normalcy blindness – This is also labelled the ostrich effect or negative panic. It is the tendency of people to think that things will carry on broadly the way they are at the moment. This is the mother of complacency. Who could have conceived of the current lockdown till it actually happened?

What can we learn for corporate risk management?

Be aware of our biases:

- The first step to overcoming a bias is to recognise it, and boards should explicitly challenge themselves about whether they are fully compensating for their own biases.

- Boards should always consider the worst case carefully and stress test themselves against how bad the scenario needs to be to threaten the company’s survival. There is likely to be a new movement to force companies to put these scenarios in the Annual Report (ie when the FRC wakes up to it). Daniel Kahneman’s suggestion is that you conduct a premortem, ie before you start a project, assume that it has all gone terribly wrong and then work out what would have happened to cause this10. It has the great virtue of legitimising, and indeed forcing, worst-case discussions, without people feeling that they are being unnecessarily miserable.

- Independent evidence should increasingly be used to verify risk assumptions and estimates. Estimating the probability of an event happening would be greatly helped and substantiated by some external references. Non-executive directors are well-placed to offer some of this, and should ask for some independent confirmation or view as well.

- Boards should not use other companies’ risk models as a guide, as this promotes group thinking. Benchmarking can be a trap for the lazy!

- Companies should think of the world as one global market place, and assume that something that happens even a long way away has a real chance of happening to their business.

- Boards need to resist the simplistic explanation and action. This is often evident, for example, in risk mitigations, which tend to be bland and simplistic.

- Full and thoughtful use of risk management processes should be reinforced, and used as a forcing mechanism for debate and to try to reduce the impact of human biases in judgements7.

- The board diversity plan might well consider the need for having at least one pessimist (apart from the CFO), on the basis that there will already be plenty of optimists.
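The stress-test question in the list above (how bad does the scenario need to be to threaten the company’s survival?) can be turned into simple arithmetic. What follows is only a minimal sketch, not a recommended model. The function names and all figures are hypothetical, and it assumes that costs stay fixed, that revenue falls by a constant fraction, and that the shortfall is met from cash alone.

```python
def months_until_cash_runs_out(cash, monthly_revenue, monthly_costs, revenue_drop):
    """Months of survival if revenue falls by `revenue_drop` (0.0 to 1.0).

    Assumption (illustrative only): costs stay fixed and the monthly
    shortfall is funded entirely from the cash balance.
    Returns float('inf') if the company still covers its costs.
    """
    if not 0.0 <= revenue_drop <= 1.0:
        raise ValueError("revenue_drop must be between 0.0 and 1.0")
    monthly_burn = monthly_costs - monthly_revenue * (1.0 - revenue_drop)
    if monthly_burn <= 0:
        return float("inf")
    return cash / monthly_burn


def smallest_fatal_drop(cash, monthly_revenue, monthly_costs, horizon_months):
    """Reverse stress test: the smallest revenue drop, in whole percentage
    points, that exhausts cash within `horizon_months`.

    Returns the drop as a fraction, or None if even a total loss of
    revenue is survivable over the horizon.
    """
    for pct in range(0, 101):
        drop = pct / 100
        months = months_until_cash_runs_out(cash, monthly_revenue, monthly_costs, drop)
        if months <= horizon_months:
            return drop
    return None


if __name__ == "__main__":
    # Hypothetical company: 31 in cash, 10 revenue and 9 costs per month.
    print(smallest_fatal_drop(31, 10, 9, horizon_months=6))
    # A revenue_drop of 1.0 models a complete shutdown.
    print(months_until_cash_runs_out(31, 10, 9, revenue_drop=1.0))
```

The point of running the calculation backwards is that the board debates a concrete threshold rather than whether a given scenario feels plausible.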

Other implications of risk for the board

- Boards need to be even more aware of self-serving bias when receiving performance reviews, risks and scenarios from management. This doesn’t mean that management is being potentially dishonest or improper. It’s just that they inevitably suffer from biases, just like anyone else. This was very evident in the 2008 Great Financial Crisis, where many executives were slow to realise that their world had changed for ever.

- Companies’ risk appetites should be reviewed again. Companies should decide how many months of shutdown they want to be able to survive. The UK CAA recommends that airlines keep three months’ costs in cash resources, ie enough to sustain a three-month shutdown. Should other companies strive for this too, and indeed is that enough now for airlines?

- Boards should look to discuss their risk appetites with large shareholders, as there is inevitably a cost to more caution and shareholder support will be needed. I suspect that we will get a much more sympathetic hearing now than we would have done a few months ago.

- Remuneration Committees should be thinking more about risk, and what they would do if certain risks crystallised. There needs to be a balance between the demands of executives and the best interests of the company in unusual circumstances. The latter must take account of both investor and public reactions to executive rewards. For example, if a company is taking furlough money in exceptional circumstances from the government, should it also regard this as an exceptional circumstance in restricting remuneration of its executives? This thinking might need to be documented in advance in future in Remuneration Strategies and individual employment contracts.

- Legal departments should be looking anew at all contracts and insurance contracts to see that they give some protection in exceptional circumstances. We have already seen how some force majeure clauses do not include these exceptional times. We may need to explore ‘sharing the pain’ clauses, as not every party can protect itself against business shutdowns by passing the costs on to its suppliers.

Conclusion

Business didn’t see coming a pandemic risk that could cause such commercial disruption. Most governments seem to have understood the risk, but chose to do little to prepare for it. All of this reflects a common failure of risk management. Even if the risk management process is operating correctly, it still relies on human judgement. There are things we can do. We need to recognise and counter our human biases and judgemental failings in assessing risk. We then need to build in rigorous risk management processes that themselves help to force out the underlying assumptions and thinking.

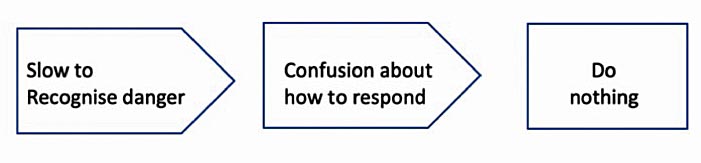

Of course, recognising the full risk is one thing. Actually doing something about it is another. You can summarise most governmental reactions as:

In the UK government, this failure was illustrated in its reaction to both its own risk assessment and the eventual emergence of the coronavirus in China. “We just watched. A pandemic was always at the top of our national risk register – always – but when it came we just slowly watched”, according to a government insider11.

This is clearly not a chain of failure that companies would want to replicate.

Finally, there is one more bias that we haven’t covered yet, but which will be familiar to most of us: size bias. There’s an obvious reason why, in Bill Gates’s example, missiles have been worried about much more than microbes. It’s difficult to believe that something so small12 can lay low most of humanity. Sometimes, it’s even the smallest things that catch us out.

Notes

1. Briefing at the London School of Economics in 2008

2. Bill Gates TED talk 2015

3. Investopedia

4. WHO website

5. Bazerman & Watkins HBR April 2003

6. Great article on why we fail to provide for disasters from Tim Harford in the FT

7. For example, see some ideas in Risks & the boardroom from my blog

8. Speech to the nation by British Prime Minister Neville Chamberlain in a broadcast in September 1938. The Nazis invaded the Sudetenland and Hitler, emboldened by the lack of response from Britain and France, then conquered Czechoslovakia and invaded Poland, sparking World War Two.

9. Often misusing Occam’s Razor, thinking that the simplest solution is most likely to be the right one.

10. Definitely worth a read: Daniel Kahneman ‘Thinking Fast and Slow’

11. Insight in Sunday Times 19 April 2020. This excoriating article displays the government and its advisers suffering from every single people vulnerability described above.

12. About one ten-thousandth of a millimetre
